Color Matching and Illumination Estimation for Urban Scenes

Abstract

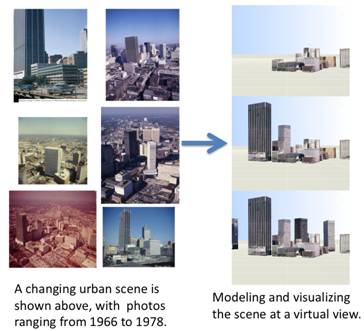

Photographs taken of the same scene often look very different due to conditions such as the time of day, the camera characteristics, and subsequent processing of the image. Prime examples are the countless photographs of urban centers taken throughout history. In this paper we present an approach to match the appearance of photographs that removes effects such as different camera settings, illumination, fading ink and paper discoloration over time, and digitization artifacts. Global histogram matching techniques are inadequate for appearance matching of complex scenes, where background, light, and shadow can vary drastically and make correspondence a difficult problem. We alleviate this correspondence problem by registering photographs to 3D models of the scene. In addition, by estimating the calendar date and time of day, we can remove the effect of drastic lighting and shadow differences between the photographs. We present results for urban scenes and show that our method allows realistic visualizations that blend information from multiple photographs without color-matching artifacts.
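For context, the global histogram matching that the abstract calls inadequate can be sketched in a few lines. This is a minimal sketch of that generic baseline for a single 8-bit channel (the function names and the 256-level assumption are illustrative), not the paper's registration-based method: it aligns intensity distributions only and has no notion of which pixels actually correspond.

```python
from bisect import bisect_left
from itertools import accumulate
from typing import List, Sequence


def normalized_cdf(values: Sequence[int], levels: int = 256) -> List[float]:
    """Cumulative histogram of integer intensities in [0, levels), scaled to end at 1.0."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    return [count / total for count in accumulate(hist)]


def match_histogram(source: Sequence[int], reference: Sequence[int], levels: int = 256) -> List[int]:
    """Remap source intensities so their global histogram approximates the reference's."""
    src_cdf = normalized_cdf(source, levels)
    ref_cdf = normalized_cdf(reference, levels)
    # For each source level, take the first reference level whose CDF reaches the source CDF.
    lut = [min(bisect_left(ref_cdf, p), levels - 1) for p in src_cdf]
    # Apply the lookup table pixel by pixel; spatial position plays no role at all.
    return [lut[v] for v in source]
```

Because the lookup table depends only on the two histograms, a sunlit facade in one photograph and a shadowed one in another are pushed toward the same global distribution, which illustrates why correspondence, rather than global statistics, is the hard part for complex scenes.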

ICCV Workshop, 2009 Full paper (PDF)

Active Lighting for Video Conferencing

Abstract

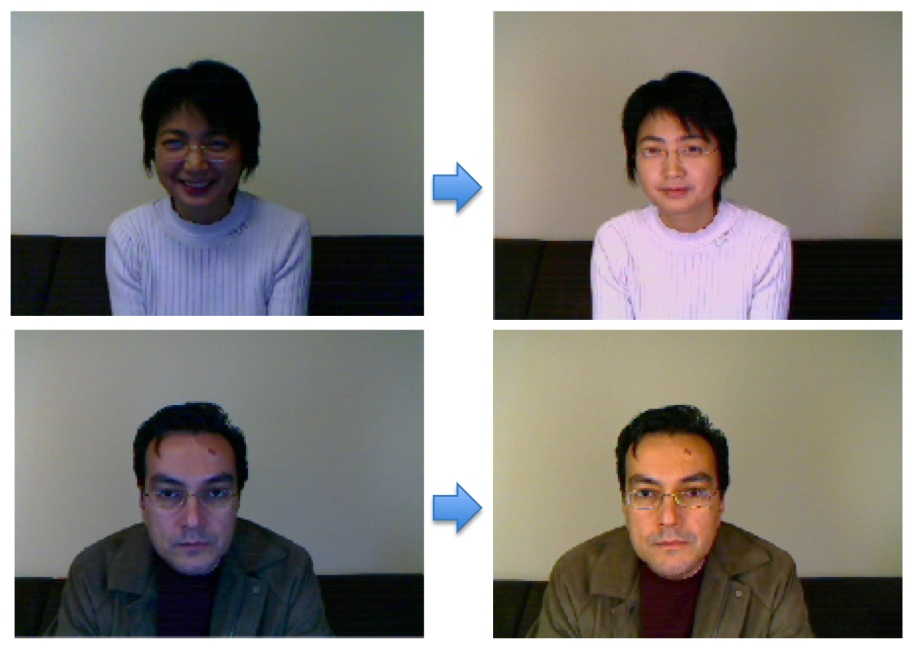

In consumer video conferencing, lighting conditions are usually not ideal, so image quality is poor. Lighting affects image quality in two respects: brightness and skin tone. While there has been much research on improving the brightness of captured images, including contrast enhancement and noise removal (which can be thought of as components of brightness improvement), little attention has been paid to skin tone. In contrast, it is common knowledge among professional stage lighting designers that lighting affects not only brightness but also color tone, which plays a critical role in the perceived look of the host and the mood of the stage scene. Inspired by stage lighting design, we propose an active lighting system that automatically adjusts the lighting so that the image looks visually appealing.

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), vol.19, no.12, pp.1819-1829, Dec 2009 Full paper (PDF)

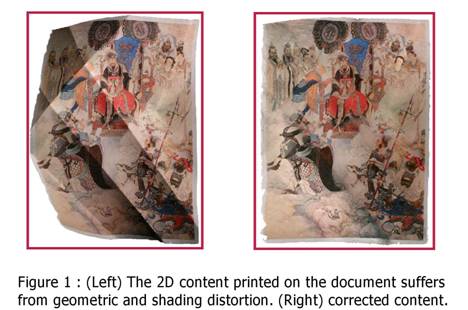

Geometric and Photometric Restoration of Distorted Documents

Abstract

We present a system to restore the 2D content printed on distorted documents. Our system works by acquiring a 3D scan of the document's surface together with a high-resolution image. Using the 3D surface information and the 2D image, we can ameliorate unwanted surface distortion and effects from non-uniform illumination. Our system can process arbitrary geometric distortions without requiring any pre-assumed parametric model of the document's geometry. The illumination correction uses the 3D shape to distinguish content edges from illumination edges to recover the 2D content's reflectance image, while making no assumptions about light sources and their positions. Results are shown for real objects, demonstrating a complete framework capable of restoring geometric and photometric artifacts on distorted documents.
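The idea of using shape to separate content edges from illumination edges can be illustrated with a toy 1D example. The sketch below is an assumption-laden simplification in the spirit of Retinex, not the paper's algorithm: it models intensity as reflectance times shading, works in the log domain, discards log-intensity steps that coincide with a jump in a hypothetical per-pixel surface-normal angle (or that are too small to be printed content), and re-integrates the remaining steps. The thresholds `grad_thresh` and `angle_thresh` are illustrative, and reflectance is recovered only up to a global scale (the first pixel is fixed to 1).

```python
import math
from typing import List, Sequence


def recover_reflectance_1d(intensity: Sequence[float],
                           normal_angle: Sequence[float],
                           grad_thresh: float = 0.05,
                           angle_thresh: float = 0.1) -> List[float]:
    """Toy 1D reflectance recovery keeping only log-intensity steps judged to be content edges.

    intensity:    positive pixel intensities along one scanline (reflectance * shading)
    normal_angle: hypothetical surface-normal angle (radians) per pixel, e.g. from a 3D scan
    """
    log_i = [math.log(v) for v in intensity]
    log_r = [0.0]  # scale is unknown, so anchor the first pixel at log(1) = 0
    for k in range(1, len(log_i)):
        step = log_i[k] - log_i[k - 1]
        # A step where the surface orientation also changes is attributed to illumination.
        geometry_edge = abs(normal_angle[k] - normal_angle[k - 1]) > angle_thresh
        # Small steps count as smooth shading; large steps away from geometry edges as content.
        content_edge = abs(step) > grad_thresh and not geometry_edge
        log_r.append(log_r[-1] + (step if content_edge else 0.0))
    return [math.exp(v) for v in log_r]
```

For a scanline `[1.0, 1.0, 0.5, 0.5, 0.25, 0.25]` with normals `[0, 0, 0, 0, 1, 1]`, the darkening at index 2 happens on flat paper and is kept as ink, while the equal darkening at index 4 coincides with a fold and is removed as shading, giving a reflectance of roughly `[1, 1, 0.5, 0.5, 0.5, 0.5]`.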

The Tenth International Conference on Computer Vision (ICCV

2005) Full paper (PDF) Space-Time Light Field

Rendering Abstract In this paper, we propose a novel framework called space-time light field rendering, which allows continuous exploration of a dynamic scene in both space and time. Compared to existing light field capture/rendering systems, it offers the capability of using unsynchronized video inputs and the added freedom of controlling the visualization in the temporal domain, such as smooth slow motion and temporal integration. In order to synthesize novel views from any viewpoint at any time instant, we develop a two-stage rendering algorithm. We first interpolate in the temporal domain to generate globally synchronized images using a robust spatial-temporal image registration algorithm followed by edge-preserving image morphing. We then interpolate these software-synchronized images in the spatial domain to synthesize the final view. In addition, we introduce a very accurate and robust algorithm to estimatesubframe temporal offsets among input video sequences. Experimental results from unsynchronized videos with or without time stamps show that our approach is capable of maintaining photorealistic quality from a variety of real scenes. For example, the figures in the right column are synthesized using ST-LFR, which are much smoother. IEEE Transactions on Visualization and Computer graphics (TVCG), vol.13, no.4, pp.697-710, July 2007 Full paper (PDF) Robust and Accurate Visual Echo Cancelation in a Full-duplex

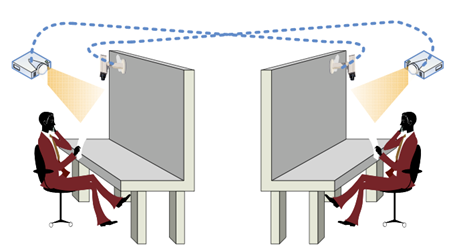

Projector-camera System Abstract In a typical remote collaboration

setup, two or more projector-camera pairs are

“cross-wired”, as shown in the Figure above, to form a

full-duplex system for two-way communication. Images from the projector are

mixed with real objects (such as papers with

writings) to create a shared space. Therefore the camera will capture an image

in which the projected image is also embedded. If we simply send the image for

display on the other end, there could be a feed- back loop

that will distort the projected image, shown in the right image below. This is analogous to the audio

echo effect in a full-duplex phone system. We

developed a comprehensive set of techniques to address the “visual echo”

problem in a full-duplex projector-camera system. CVPR Workshop, 2006 Full paper (PDF)
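Under a deliberately simplified model, visual echo cancellation amounts to predicting what the camera sees of the projected image and removing it before transmission. The sketch below assumes the camera-to-projector pixel correspondence is already known (`correspondence`, hypothetical) and that the projector-to-camera color response is a single affine map (`gain` and `offset`, also assumptions), and it subtracts the predicted echo additively. The paper's actual geometric and photometric calibration, and its treatment of how real objects modulate the projected light, may well differ.

```python
from typing import List, Optional, Sequence


def cancel_visual_echo(captured: Sequence[float],
                       projected: Sequence[float],
                       correspondence: Sequence[Optional[int]],
                       gain: float,
                       offset: float) -> List[float]:
    """Remove the predicted projector contribution from a captured camera frame.

    captured:       camera pixel intensities
    projected:      intensities of the image sent to the local projector
    correspondence: for each camera pixel, the projector pixel it sees, or None
    gain, offset:   assumed affine projector-to-camera color response
    """
    cleaned = []
    for i, c in enumerate(captured):
        j = correspondence[i]
        # Camera pixels outside the projected area receive no echo.
        echo = 0.0 if j is None else gain * projected[j] + offset
        # Clamp at zero so noise or calibration error cannot produce negative intensities.
        cleaned.append(max(0.0, c - echo))
    return cleaned
```

Sending `cleaned` rather than the raw camera frame to the remote site is what breaks the feedback loop in this model: only the local real objects (such as handwriting) are transmitted, not a re-captured copy of the remote participant's image.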