interfaces

what is an interface?

interface

border zones

human-computer, human-human, computer-computer

points of interaction

interaction types

ways a person interacts with a product

instructing, conversing, manipulating, exploring, and responding

helpful for formulating conceptual models independent of any implementation

instructing

users issue instructions to the system

keyboard shortcuts, menus, command line interfaces

when to use instructing

supports many activities

quick and efficient

repeated actions on multiple objects

complexity challenges

conversing

having a conversation with a system

system responds much as a person would in human-human conversation

when to use conversing

help/assistive facilities

chatbots

speech-based interfaces

familiar and less complex, but low discoverability

suited to single actions, not repeated ones

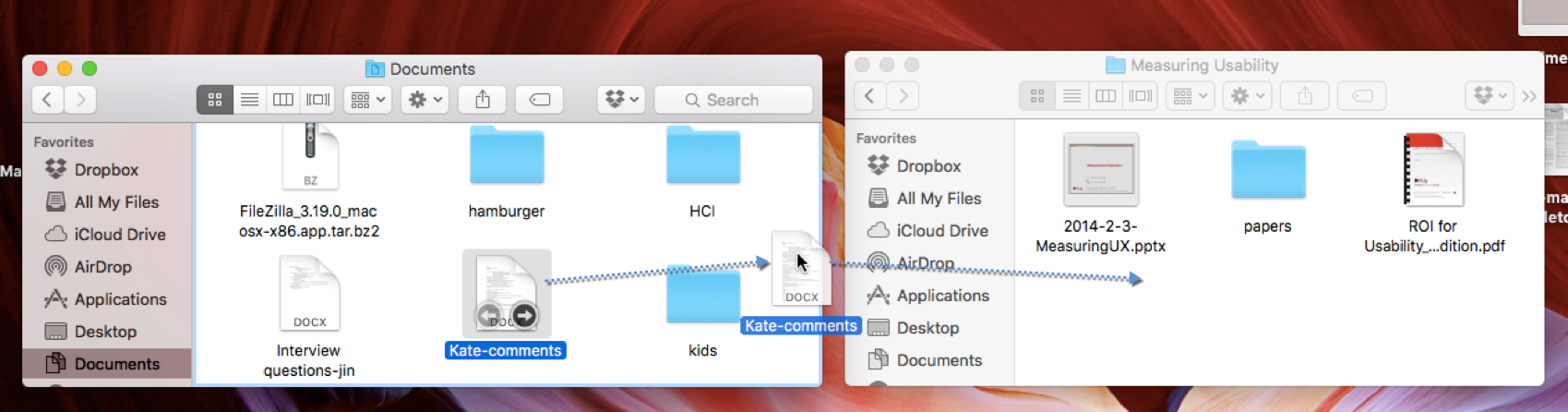

manipulating

manipulating objects

capitalize on knowledge of physical world

direct manipulation

analogous to interaction with physical objects

continuous representation

rapid, reversible, incremental actions

immediate feedback

physical actions instead of issuing text commands

exploring

moving through virtual or physical environments

exploit knowledge of navigating existing spaces

responding

system taking initiative

alert, describe, or show the user

types of interfaces

interface types

common or traditional

command-line, GUI, multimedia, web-based

surface

pen-based, multi-touch

reality-based

tangible, virtual and augmented reality

body-based

gesture, haptic, gaze, wearables, brain-computer

agents

voice, robots and drones, smart, appliances

common or traditional interfaces

command-line interfaces

type commands

keyboard shortcuts

more efficient and quicker for some tasks

low discoverability

graphical user interfaces (GUI)

WIMP: windows, icons, menus, and pointer

address learnability issues

recognition over recall

windows

overcome physical constraints of displays

multiple windows open

task switching

scrolled, stretched, overlapped, opened, closed, and moved

icons

depict applications, objects, commands, tools, and status

recognition: easier to learn and remember than text labels

signifiers and feedback

similar, analogical, or arbitrary

convention

menus

list of options

order top to bottom by frequency of use

group by similarity

flat, expanding, mega, collapsible, contextual

flat menus

one level

small number of items

expanding menus

more options revealed incrementally

easier navigation

mega menus

2D drop-down layout

view many options without scrolling

take up a lot of screen space

good for browsing

collapsible menus

accordion menus

collapsing content

provide an overview of the structure
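A minimal sketch of a collapsible (accordion) menu, assuming plain HTML with the native `<details>` and `<summary>` elements rather than any JavaScript framework. The section names and link targets are hypothetical placeholders.

```html
<!-- Accordion menu sketch: each <details> section expands/collapses when its <summary> is clicked. -->
<!-- Section names and link targets below are hypothetical. -->
<nav>
  <!-- "open" makes this section start expanded -->
  <details open>
    <summary>Courses</summary>
    <ul>
      <li><a href="#search">Search classes</a></li>
      <li><a href="#schedule">My schedule</a></li>
    </ul>
  </details>
  <!-- This section starts collapsed; only its summary line is visible -->
  <details>
    <summary>Account</summary>
    <ul>
      <li><a href="#profile">Profile</a></li>
      <li><a href="#settings">Settings</a></li>
    </ul>
  </details>
</nav>
```

The `<summary>` lines stay visible even when their sections are collapsed, which is what gives the user the structural overview.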

multimedia interfaces

combine different media

images, text, video, sound, animation

most interfaces today are multimedia

used for training, education, and entertainment

website interfaces

early websites were text-based

usability versus attractiveness

desktop versus mobile

responsive web design

automatically resize, hide, and reveal interface elements

specify a viewport

```html
<meta name="viewport" content="width=device-width, initial-scale=1.0">
```

vw / vh: units equal to 1% of the viewport width / height

```html
<h1 style="font-size:10vw">Hello World</h1>
```
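Beyond resizing text, responsive layouts typically hide and reveal elements with CSS media queries. The sketch below assumes a mobile-first approach; the 600px breakpoint and the `menu-button` / `sidebar` class names are illustrative assumptions, not a standard.

```html
<!-- Responsive sketch: the 600px breakpoint and class names are illustrative assumptions. -->
<style>
  /* Default (narrow / mobile) layout: show a compact menu button, hide the full sidebar */
  .sidebar { display: none; }
  .menu-button { display: block; }

  /* Viewports at least 600px wide: reveal the sidebar, hide the compact button */
  @media (min-width: 600px) {
    .sidebar { display: block; }
    .menu-button { display: none; }
  }
</style>

<button class="menu-button">Menu</button>
<aside class="sidebar">Full navigation</aside>
```

For the `vw` example, `font-size:10vw` on a 400px-wide viewport gives a 40px heading, and the heading scales automatically as the viewport width changes.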

surface-based interfaces

touch interfaces

single- and multi-touch

smartphones and tablets

tabletops and digital whiteboards

gestures

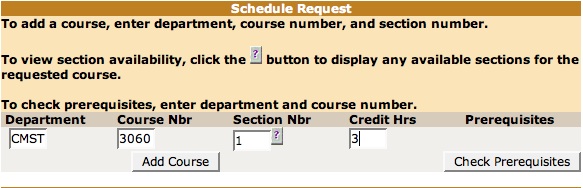

pen-based interfaces

write, draw, command with a pen

take advantage of well-honed writing and drawing skills

tablets

annotation

pen + touch interfaces

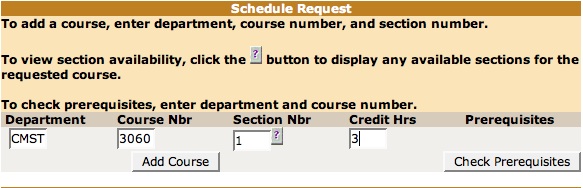

surface-based interface for class scheduling?

reality-based interfaces

virtual reality

computer-generated graphic simulations

feel virtually real when interacting

different viewpoints

fidelity

entry cost and requirements

augmented reality

superimposing virtual content onto physical reality

mobile devices, headsets, head-up displays (HUDs)

what type of augmentation, when, where

information pollution

tangible interfaces

use of physical objects and sensors

no single locus of control

affordances

education

embodied cognition

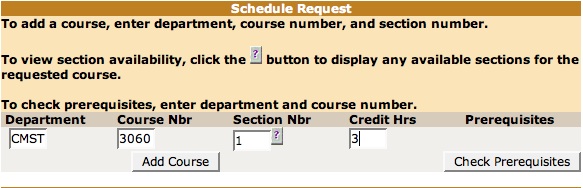

reality-based interface for class scheduling?

body-based interfaces

gesture-based systems

moving arms and hands to communicate

computer vision

machine learning

haptic interfaces

provide tactile feedback

vibrations and force

gaze-based interfaces

gaze: eye movements

very fast

provides implicit context

wearables

worn on the body

smart watches

sensors

devices, but also clothing

brain-computer interfaces

interact via brainwaves

electrodes on the scalp detect electrical activity from firing neurons

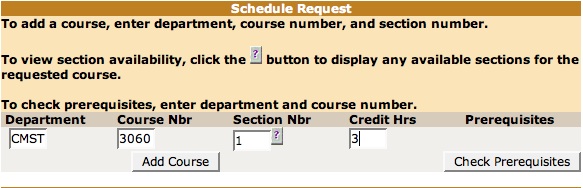

body-based interface for class scheduling?

agent interfaces

voice user interfaces

using voice to interact

command or conversing interactions

speech recognition

voice assistants

robots and drones

manufacturing

search and rescue

domestic robots

joysticks and controllers

follow a human

smart interfaces

agency

artificial intelligence

context-aware

improve efficiency and cost effectiveness

human-building interaction

appliances

everyday machines in the home

do something specific quickly

connectivity

agent-based interface for class scheduling?

choosing what type of interface to use?

user needs

main driving factor

how do we know?

we often don't

questions?

reading for next class

Chapter 8: Data Gathering

Interaction Design: Beyond Human-Computer Interaction