evaluation: inspections, analytics, models

indirect methods

knowledge codified in heuristics

data collected remotely

models that predict users' performance

inspections

experts

knowledgeable about interaction design

knowledgeable about users' needs and typical behaviors

heuristic evaluation

walk-throughs

heuristic evaluation

experts evaluate a design guided by a set of usability principles

heuristics

high-level design principles

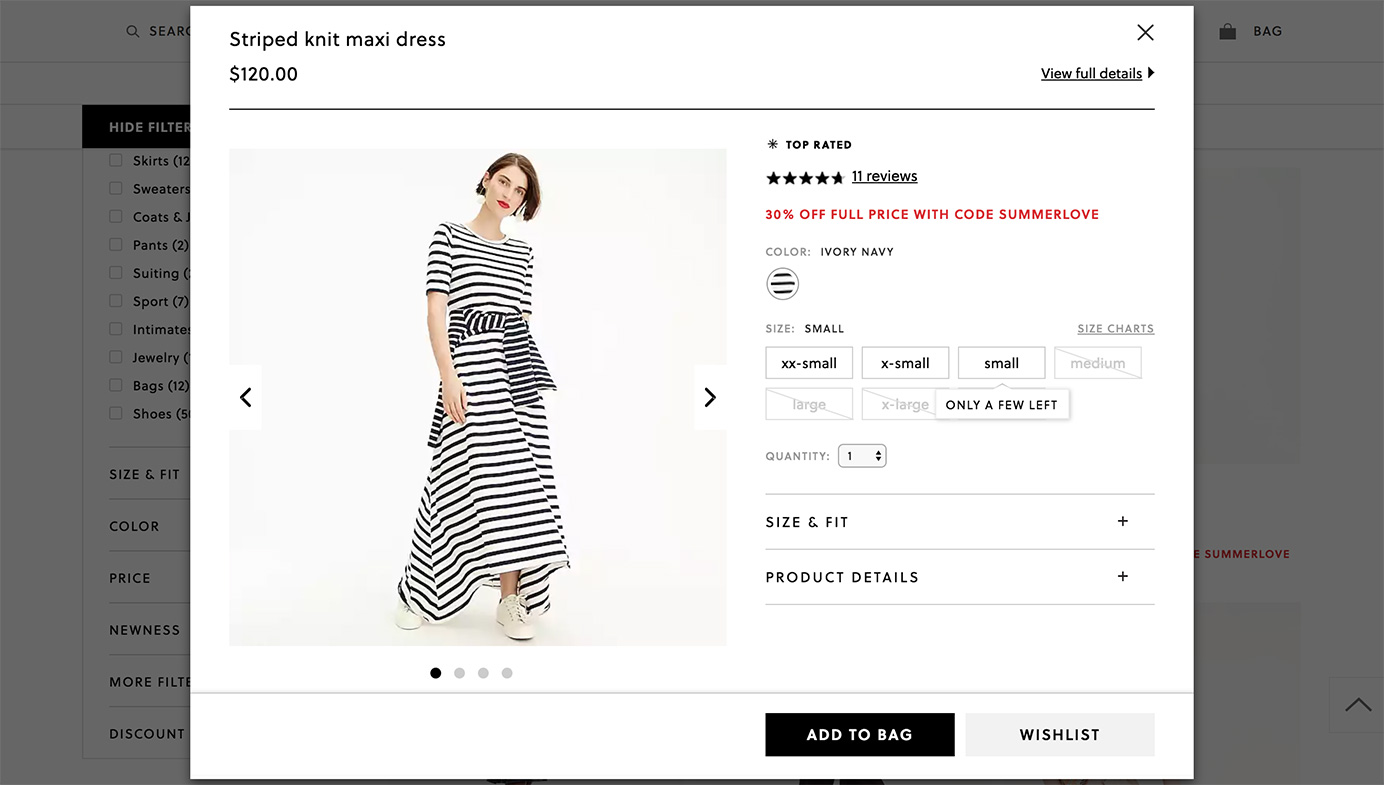

heuristic:

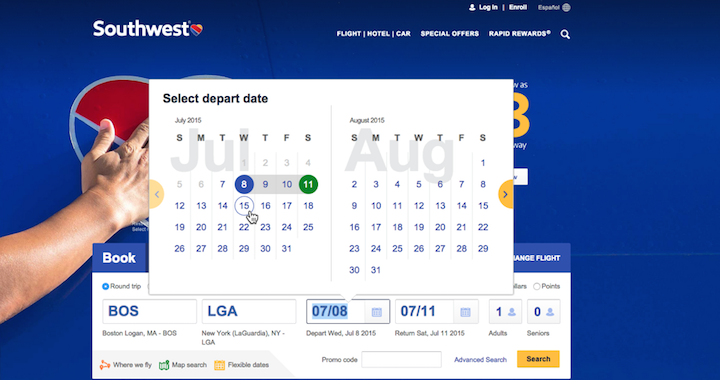

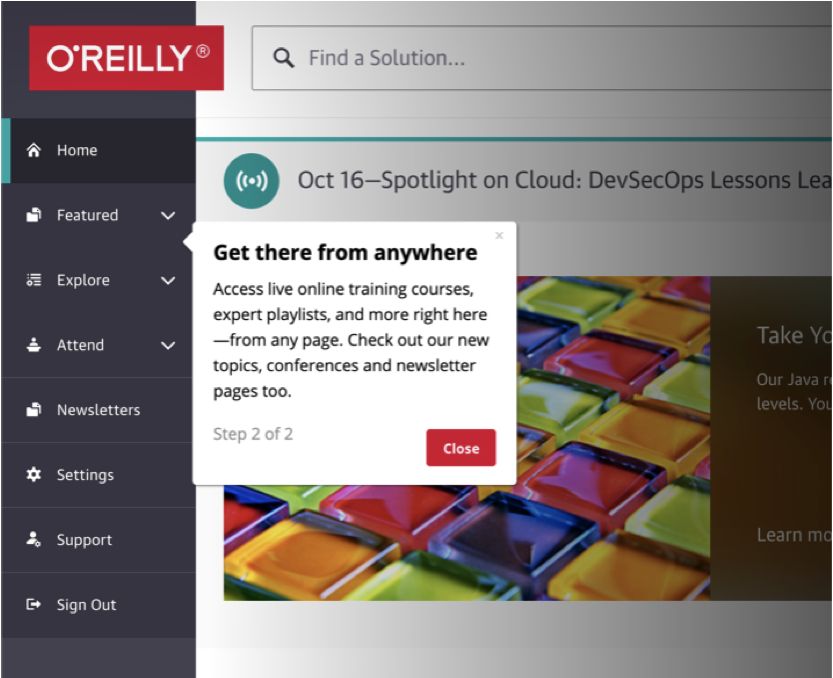

visibility of system status

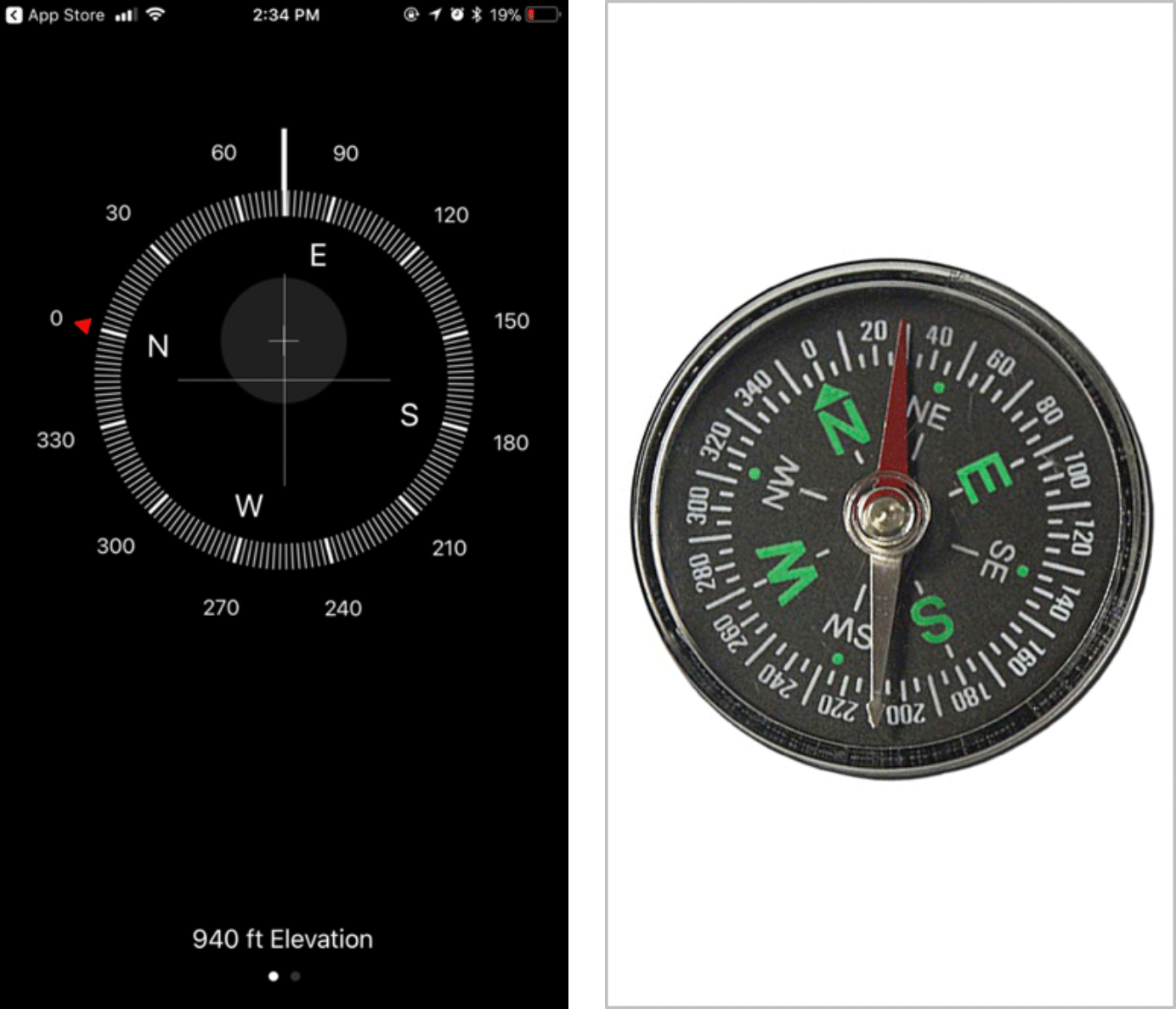

heuristic: match between system and the real world

heuristic:

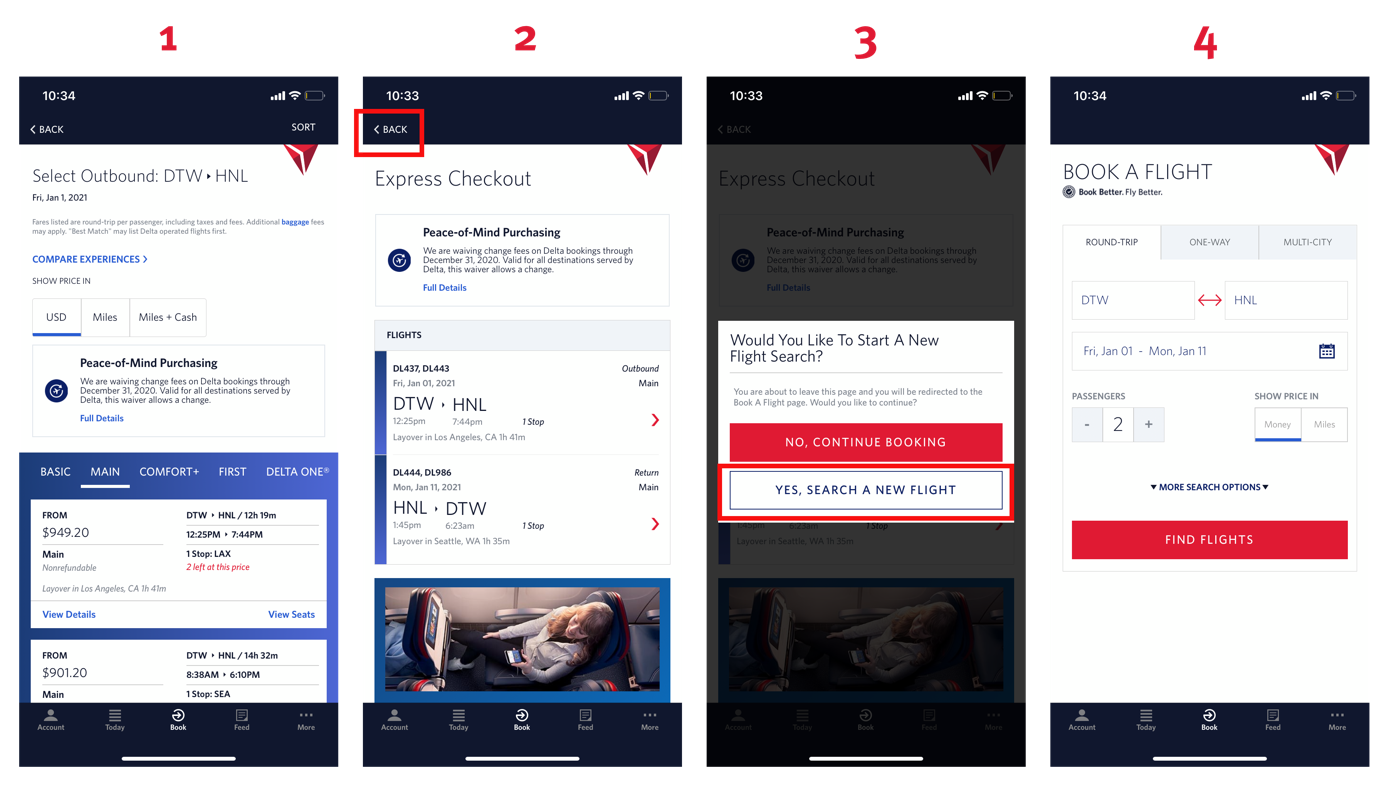

user control and freedom

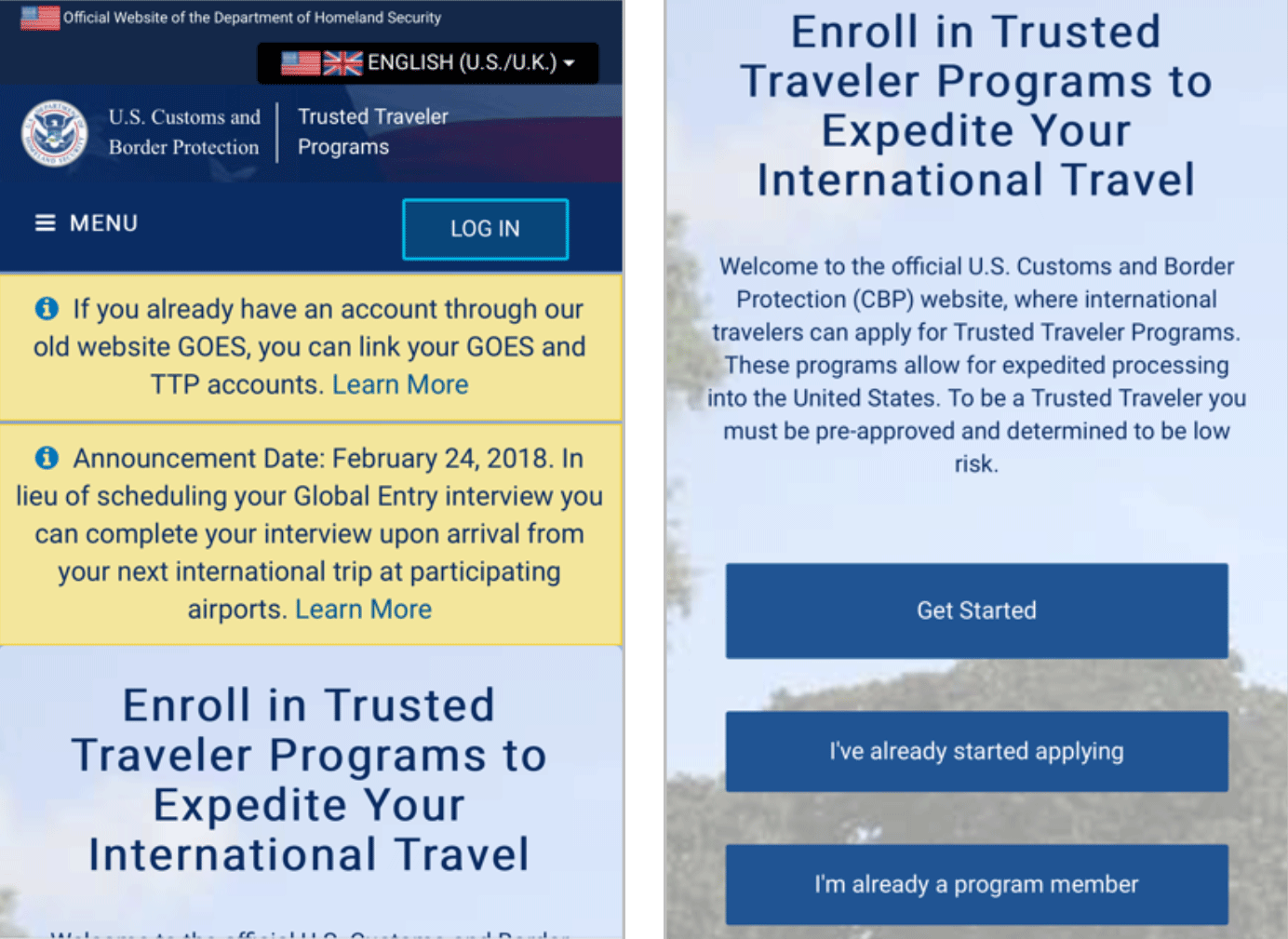

heuristic:

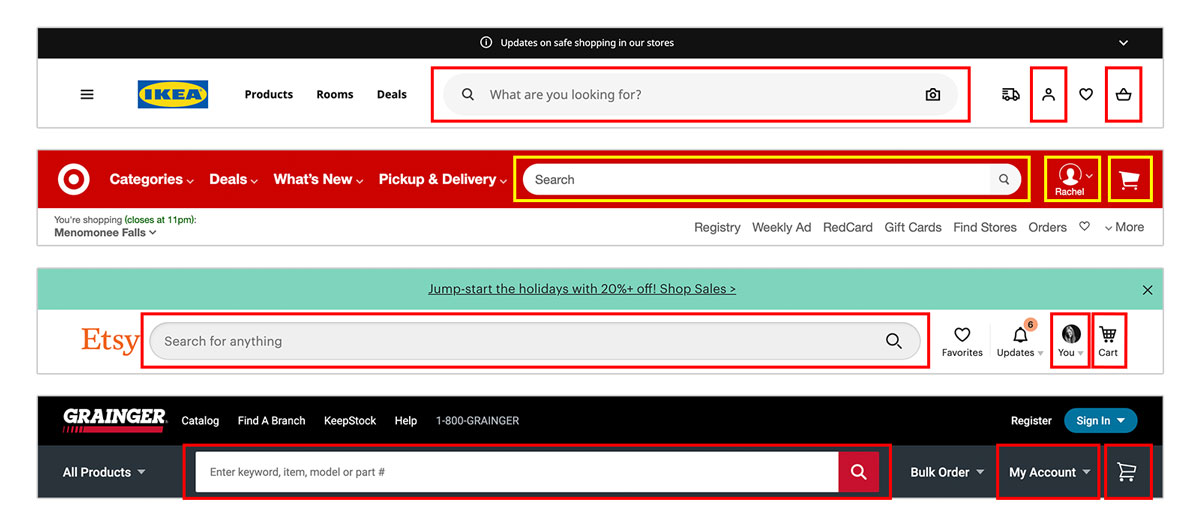

consistency and standards

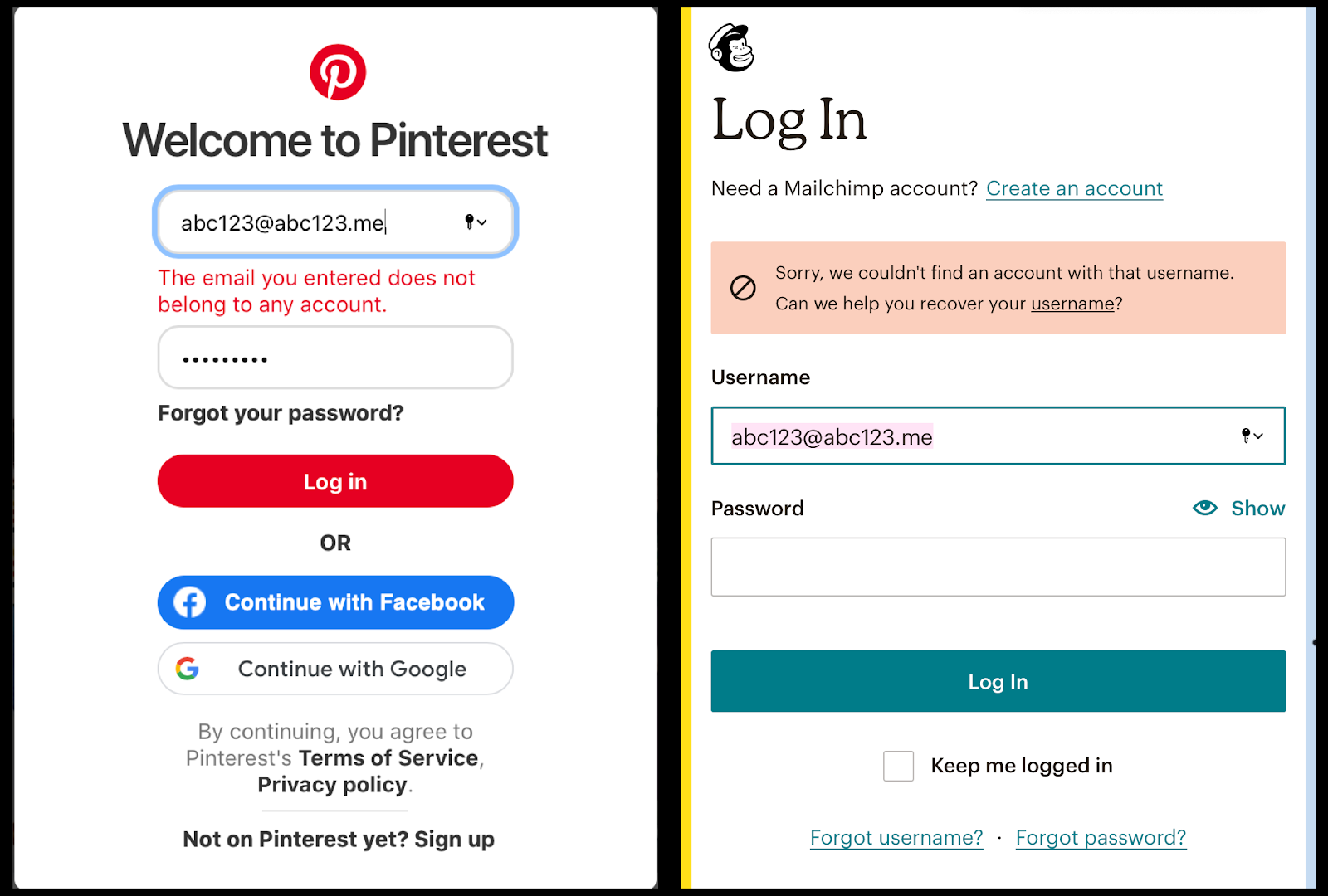

heuristic: error prevention

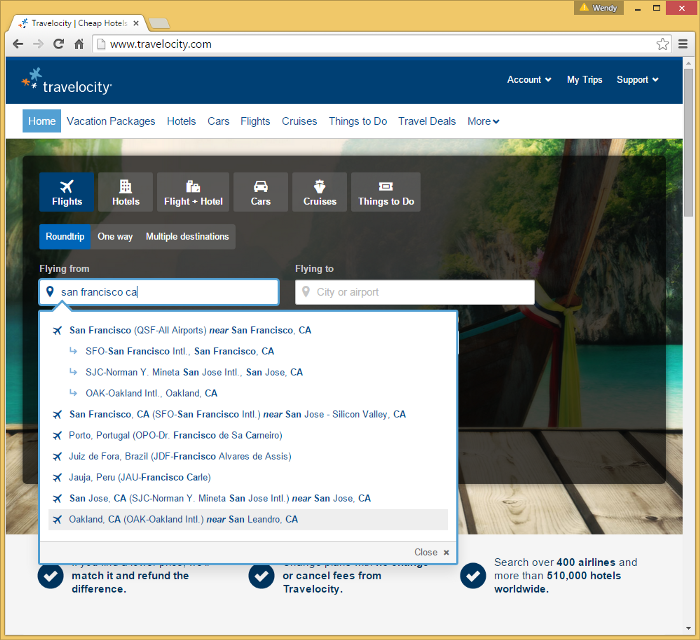

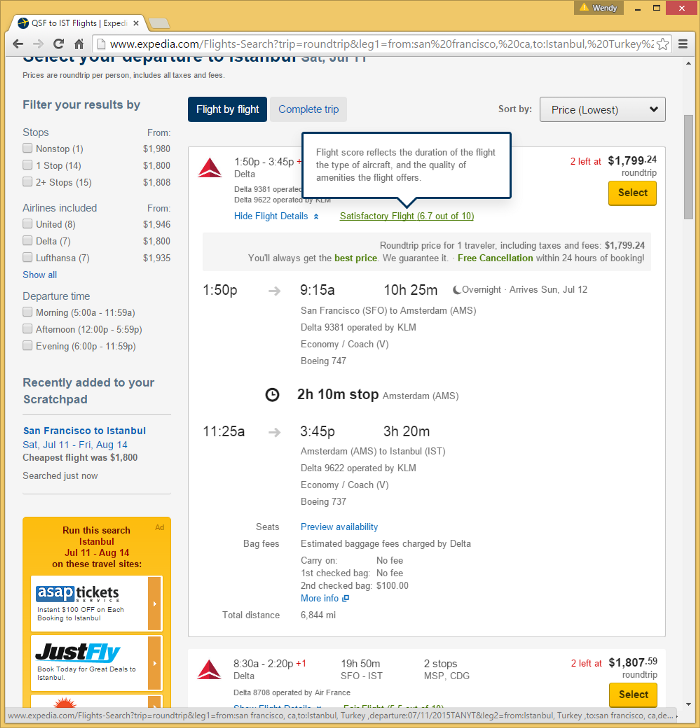

heuristic: recognition rather than recall

heuristic: flexibility and efficiency of use

heuristic: aesthetic and minimalist design

heuristic: help users recognize, diagnose, & recover from errors

heuristic: help and documentation

eight golden rules (Shneiderman)

strive for consistency

seek universal usability

offer informative feedback

design dialogs to yield closure

prevent errors

permit easy reversal of actions

keep users in control

reduce short-term memory load

accessibility heuristics

web content accessibility guidelines (WCAG)

perceivable: alternative representations

operable: navigation and input modalities

understandable: readable text; pages that appear and operate in predictable ways

robust: compatibility with current and future tools
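Parts of an accessibility review can be automated. For the "perceivable" principle, a minimal sketch that flags `<img>` elements with no alt attribute at all, using Python's built-in `html.parser`. It assumes the page is available as an HTML string; an empty `alt=""` is deliberately not flagged because it conventionally marks a decorative image, and no script can judge whether existing alt text is actually meaningful.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Collect the src of every <img> tag that has no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Tag and attribute names arrive lowercased; attrs is a list of (name, value).
        if tag == "img":
            names = {name for name, _ in attrs}
            if "alt" not in names:
                self.missing.append(dict(attrs).get("src") or "<no src>")


def images_missing_alt(html: str) -> list[str]:
    """Return the src of each image that lacks a text alternative."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


if __name__ == "__main__":
    # Hypothetical page fragment
    page = (
        '<p>Sales</p><img src="chart.png">'
        '<img src="logo.png" alt="Company logo">'
        '<img src="divider.png" alt=""/>'
    )
    print(images_missing_alt(page))
```

A check like this only covers one narrow, mechanical part of "perceivable"; the other principles still need human judgment.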

custom heuristics

core heuristics can be too general for a specific product

tailor core heuristics, drawing on:

other design guidelines

market research and results from studies

requirements documents

developing heuristics

convert design guidelines

translate guidelines into questions

revising heuristics: remove overlap between them

which and how many?

depends on goals of evaluation

often 5-10

more: difficult to manage

fewer: not sufficiently discriminating

how many evaluators?

suggested: 3-5 evaluators identify about 75% of usability problems

fewer evaluators needed when they are more experienced

knowledgeable about intended users
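The diminishing returns behind the 3-5 evaluator suggestion can be sketched with a simple model in the spirit of Nielsen and Landauer's work: assume each evaluator independently finds any given problem with the same probability λ, so i evaluators together find a share of 1 - (1 - λ)^i. The independence assumption is a simplification, and the λ = 0.24 used below is a hypothetical value, not a measured one.

```python
def proportion_found(num_evaluators: int, detection_rate: float) -> float:
    """Expected share of problems found by at least one evaluator.

    Assumes evaluators work independently and each finds any given
    problem with the same probability `detection_rate` (lambda).
    """
    return 1.0 - (1.0 - detection_rate) ** num_evaluators


def evaluators_needed(target: float, detection_rate: float) -> int:
    """Smallest number of evaluators whose expected coverage reaches `target`."""
    if not 0.0 < detection_rate <= 1.0 or not 0.0 < target < 1.0:
        raise ValueError("need 0 < detection_rate <= 1 and 0 < target < 1")
    n = 0
    while proportion_found(n, detection_rate) < target:
        n += 1
    return n


if __name__ == "__main__":
    rate = 0.24  # hypothetical per-evaluator detection rate
    for n in range(1, 9):
        print(f"{n} evaluators: {proportion_found(n, rate):.0%}")
```

With this made-up rate, five evaluators cover roughly three quarters of the problems and each extra evaluator adds less than the one before. More experienced evaluators correspond to a higher λ, which is why fewer of them are needed.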

heuristic evaluation process

three stages

briefing session

evaluation period

debriefing session

evaluation period

two passes

first pass: get a feel for the flow of the interaction

second pass: focus on specific interface elements

tasks: functional vs mock-ups

debriefing session

discuss findings together

prioritize problems and suggest solutions

challenge: disagreement among researchers about the findings
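During debriefing, each evaluator's list has to be merged and prioritized. A minimal sketch, assuming each reported problem carries a 0-4 severity rating (a scale often used with heuristic evaluation, but not given in these notes) and that duplicate problems have already been matched to a shared short description. The `Finding` fields, problem descriptions, and ratings are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Finding:
    evaluator: str
    problem: str    # short description agreed on in the debriefing
    heuristic: str  # heuristic the problem violates
    severity: int   # assumed scale: 0 = not a problem ... 4 = usability catastrophe


def prioritize(findings: list[Finding]) -> list[tuple[str, float, int, int]]:
    """Rank problems by mean severity, then by number of evaluators reporting them.

    Each row is (problem, mean severity, evaluators reporting, severity spread);
    a large spread flags problems the evaluators disagree about.
    """
    ratings: dict[str, list[int]] = defaultdict(list)
    reporters: dict[str, set[str]] = defaultdict(set)
    for f in findings:
        ratings[f.problem].append(f.severity)
        reporters[f.problem].add(f.evaluator)
    rows = [
        (problem, float(mean(sev)), len(reporters[problem]), max(sev) - min(sev))
        for problem, sev in ratings.items()
    ]
    return sorted(rows, key=lambda row: (-row[1], -row[2]))


if __name__ == "__main__":
    findings = [
        Finding("E1", "no undo after delete", "user control and freedom", 4),
        Finding("E2", "no undo after delete", "user control and freedom", 3),
        Finding("E1", "jargon in error message", "match between system and the real world", 2),
        Finding("E3", "jargon in error message", "match between system and the real world", 1),
        Finding("E3", "inconsistent button labels", "consistency and standards", 3),
    ]
    for row in prioritize(findings):
        print(row)
```

The spread column does not resolve disagreements; it only points the group at the problems worth discussing first.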

walk-throughs

walking through a task with the product

noticing problematic features

types: cognitive and pluralistic

cognitive walk-throughs

simulating how users solve problems when interacting with a product

cognitive perspective

ease of learning through exploration

cognitive walk-throughs steps

identify characteristics of typical users

description, mock-up, or prototype

clear sequence of actions to complete task

designers and UX researchers do the analysis

walk through action sequence

record critical information while walking through

revise design
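Recording critical information while walking through is easier with a fixed form for every action in the sequence. A minimal sketch, assuming the three questions used in common textbook descriptions of the method (these notes do not list them); the task, actions, and answers are hypothetical.

```python
from dataclasses import dataclass

# Assumed question set (textbook version of the method; not listed in these notes).
QUESTIONS = (
    "Will the correct action be sufficiently evident to the user?",
    "Will the user notice that the correct action is available?",
    "Will the user associate and interpret the response from the action correctly?",
)


@dataclass
class StepRecord:
    action: str                       # one action from the task's action sequence
    answers: tuple[bool, bool, bool]  # answer to each question in QUESTIONS, in order
    notes: str = ""                   # reasons for a "no", design ideas, etc.

    def failed_questions(self) -> list[str]:
        """Questions answered "no" for this step."""
        return [q for q, ok in zip(QUESTIONS, self.answers) if not ok]


def problems(walkthrough: list[StepRecord]) -> list[tuple[str, str]]:
    """(action, question) pairs where the walk-through predicts users will struggle."""
    return [(step.action, q) for step in walkthrough for q in step.failed_questions()]


if __name__ == "__main__":
    # Hypothetical task: share a photo from a gallery app.
    walkthrough = [
        StepRecord("open the photo", (True, True, True)),
        StepRecord("tap the overflow menu", (True, False, True), "share option hidden in menu"),
        StepRecord("choose 'Copy link'", (True, True, True)),
    ]
    for action, question in problems(walkthrough):
        print(f"{action}: {question}")
```

The list of failed questions, together with the notes, is the input to the "revise design" step.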

pluralistic walk-throughs

users, developers, and usability researchers work together

each person steps through a task scenario

discuss usability issues at each step

discuss all suggested actions

advantages: detailed focus on users' tasks; well suited to safety-critical systems

limitations: time-consuming and hard to schedule

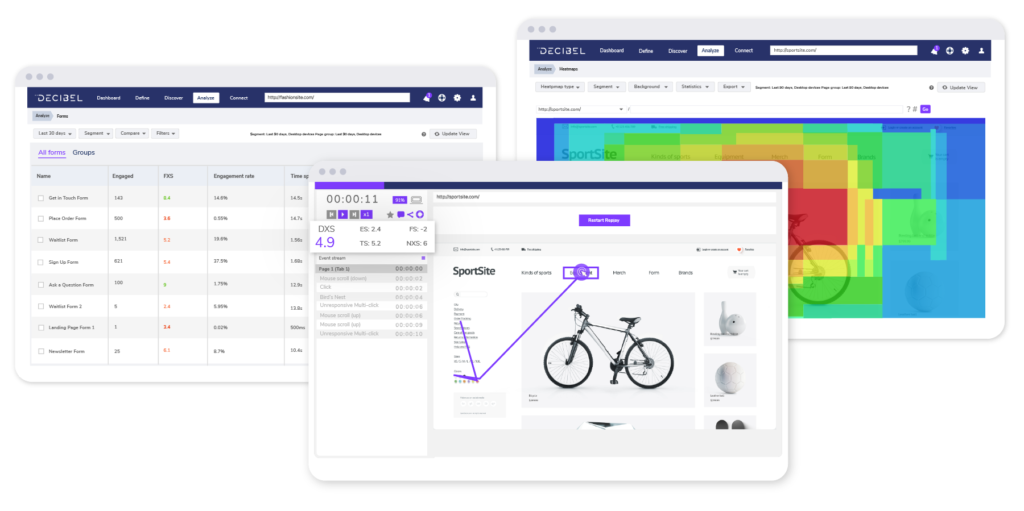

analytics and a/b testing

logging interaction

record user interactions automatically

key presses, mouse movements, time spent

advantage: unobtrusive

ethical concerns
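Automatic logging can be as simple as appending timestamped events to a list. A minimal sketch with hypothetical event names (`page_view`, `key_press`, `mouse_move`); a real deployment would also have to address the ethical concerns above, for example by telling users what is recorded.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class InteractionLog:
    """In-memory log of timestamped interaction events."""

    events: list[tuple[float, str, dict[str, Any]]] = field(default_factory=list)

    def record(self, kind: str, timestamp: Optional[float] = None, **details: Any) -> None:
        """Append one event, e.g. record("key_press", key="a")."""
        ts = time.monotonic() if timestamp is None else timestamp
        self.events.append((ts, kind, details))

    def count(self, kind: str) -> int:
        """How many events of one kind were logged."""
        return sum(1 for _, k, _ in self.events if k == kind)

    def time_spent(self) -> float:
        """Seconds between the earliest and latest logged event."""
        if not self.events:
            return 0.0
        stamps = [ts for ts, _, _ in self.events]
        return max(stamps) - min(stamps)


if __name__ == "__main__":
    log = InteractionLog()
    log.record("page_view", timestamp=0.0, page="/home")
    log.record("key_press", timestamp=2.5, key="a")
    log.record("mouse_move", timestamp=3.0, x=120, y=48)
    log.record("key_press", timestamp=12.0, key="b")
    print(log.count("key_press"), log.time_spent())
```

Explicit timestamps are passed here only to make the example repeatable; in live use `record` falls back to `time.monotonic()`.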

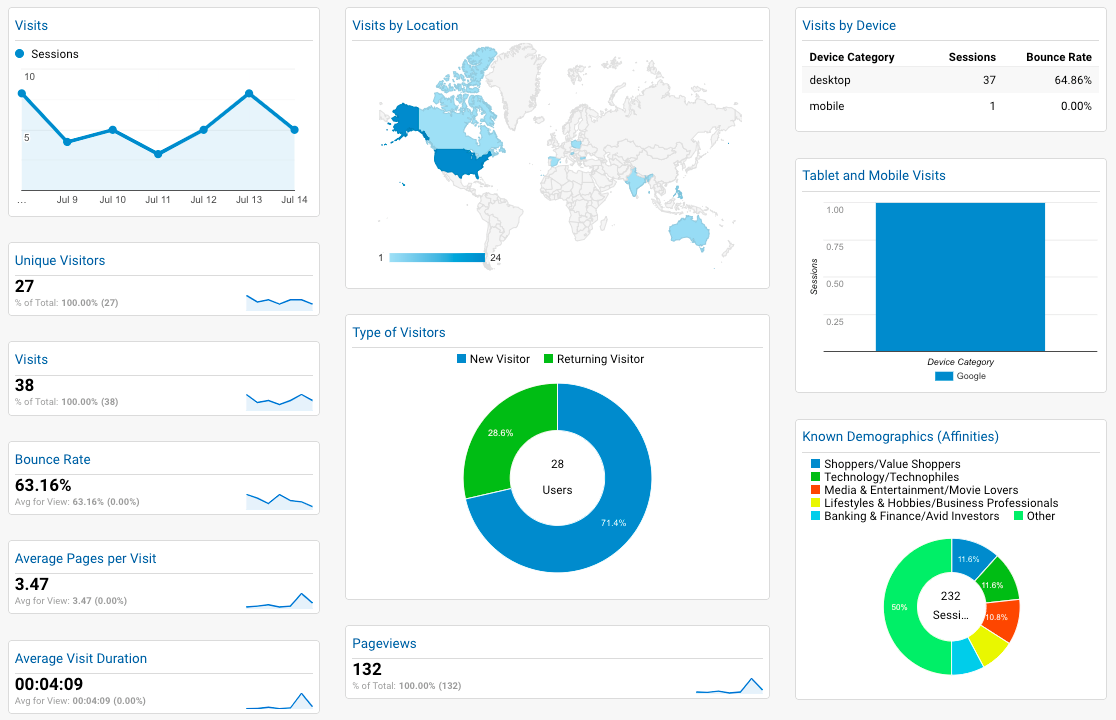

web analytics

interaction logging for websites

attract and retain customers

trace activity of users

how many people, how long they stayed, pages visited

provide "big picture" overview
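The "big picture" numbers (how many people, how long they stayed, which pages they visited) can be derived from a page-view log. A minimal sketch, assuming a hypothetical log of `(visitor_id, timestamp_seconds, page)` rows; commercial tools such as Google Analytics define sessions and durations in their own, more elaborate ways.

```python
from collections import Counter, defaultdict

# Hypothetical page-view log row: (visitor_id, timestamp in seconds, page path)
PageView = tuple[str, float, str]


def summarize(views: list[PageView]) -> dict:
    """Visitors, average time on site, and views per page from a page-view log."""
    times: dict[str, list[float]] = defaultdict(list)
    for visitor, ts, _page in views:
        times[visitor].append(ts)

    # Time on site approximated as last view minus first view for each visitor;
    # time spent on the final page is not observed, so this underestimates.
    durations = [max(ts) - min(ts) for ts in times.values()]
    page_views = Counter(page for _, _, page in views)

    return {
        "visitors": len(times),
        "avg_seconds_on_site": sum(durations) / len(durations) if durations else 0.0,
        "page_views": page_views.most_common(),  # [(page, count), ...], most viewed first
    }


if __name__ == "__main__":
    views = [
        ("v1", 0, "/"), ("v1", 30, "/products"), ("v1", 90, "/checkout"),
        ("v2", 5, "/"), ("v2", 15, "/blog"),
    ]
    print(summarize(views))
```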

types of web analytics

on-site and off-site analytics

on-site: measure visitor behavior

off-site: measure a website's visibility

non-transactional products

information and entertainment websites

hobby, music, games, blogs, and personal websites

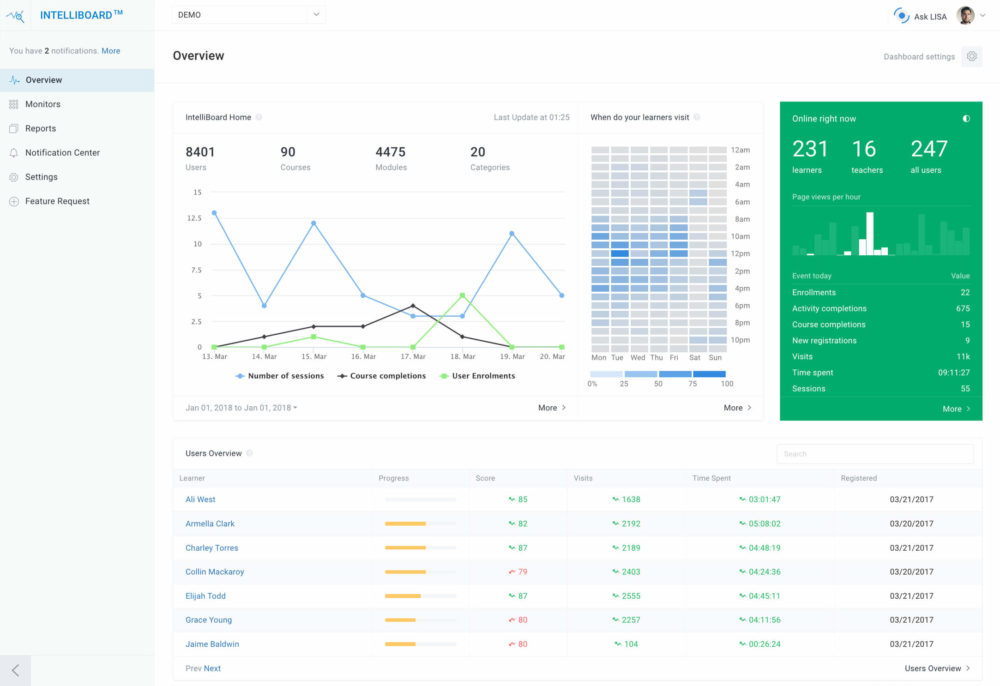

learning

learning analytics

evaluate learner activities in MOOCs

at what points do learners drop out, and why?
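The "at what points" half of the question can be answered with a completion funnel: how many learners finished each module. A minimal sketch, assuming a hypothetical record of the last module each learner completed and that modules are taken in order; the "why" still needs other data.

```python
def completion_funnel(last_completed: dict[str, int], num_modules: int) -> list[int]:
    """Number of learners who completed each module 1..num_modules.

    `last_completed` maps a learner id to the last module they finished (0 = none).
    """
    return [
        sum(1 for last in last_completed.values() if last >= module)
        for module in range(1, num_modules + 1)
    ]


def biggest_drop(funnel: list[int]) -> int:
    """Module (1-indexed) after which the most learners stopped."""
    if len(funnel) < 2:
        raise ValueError("need at least two modules")
    drops = [funnel[i] - funnel[i + 1] for i in range(len(funnel) - 1)]
    return drops.index(max(drops)) + 1


if __name__ == "__main__":
    # Hypothetical MOOC with four modules
    last_completed = {"a": 4, "b": 4, "c": 2, "d": 2, "e": 2, "f": 1, "g": 0}
    funnel = completion_funnel(last_completed, num_modules=4)
    print(funnel, "largest drop after module", biggest_drop(funnel))
```

Learners who never finish module 1 (value 0) lower the first funnel entry but are not counted as a drop between modules.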

visual analytics

thousands or millions of data points displayed

manipulate visually

social network analysis

google analytics

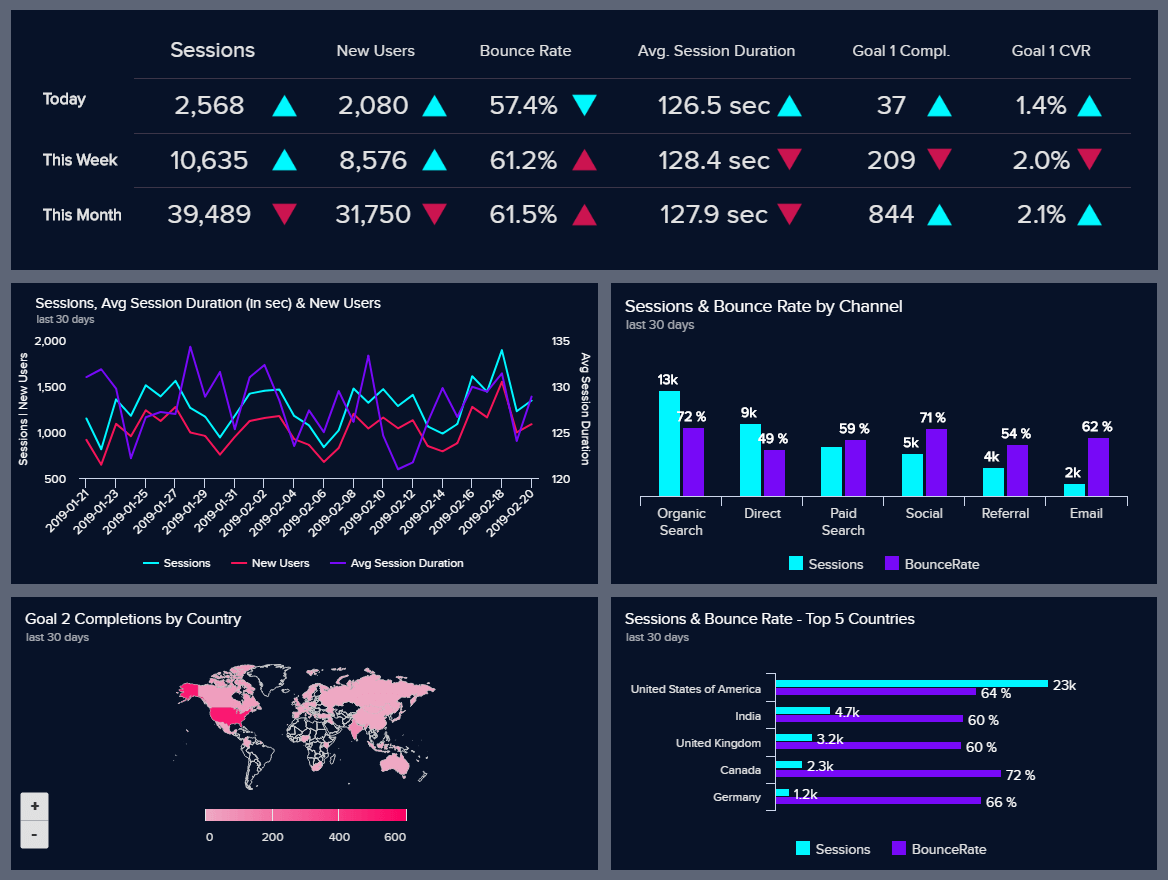

a/b testing

large-scale controlled experiment: 100s to 1000s of participants

between-subjects design: two groups of users using two different designs

doing a/b testing

identify a variable of interest

group A: served existing design

group B: served new design

dependent variable: the measure used to compare the two designs

time frame: how long the test runs

statistical analysis
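When the dependent variable is binary, such as whether a user clicked a button, one common choice of statistical analysis is a two-proportion z-test. A minimal sketch using only the standard library; the click counts are made up, and other dependent variables (for example time on page) would call for a different test.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: groups A and B have the same success rate."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)  # rate assuming H0 is true
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both groups all-success or all-failure: no evidence of a difference
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function.
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return z, 2 * (1 - phi)


if __name__ == "__main__":
    # Hypothetical results: group A (existing design) vs group B (new design)
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference between the designs is unlikely to be chance alone; it says nothing about whether the difference is large enough to matter.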

a/b testing for websites

data-driven approach for evaluating web and social media design

first do a/a testing

expect no statistically significant difference

ensure populations are random and conditions similar
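One common way to keep populations random but stable is to assign each user to a group by hashing their ID, so the same user always sees the same design. A minimal sketch with hypothetical user IDs and experiment names. In an A/A test both groups are served the same design, so group sizes should come out close to 50/50, and a significant difference in outcomes points to a problem with assignment or logging rather than with the design.

```python
import hashlib


def assign_group(user_id: str, experiment: str) -> str:
    """Deterministically assign a user to group "A" or "B" for one experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer; its parity picks the group.
    return "A" if int.from_bytes(digest[:8], "big") % 2 == 0 else "B"


def group_share(user_ids: list[str], experiment: str) -> float:
    """Fraction of users assigned to group A (should be close to 0.5)."""
    in_a = sum(1 for uid in user_ids if assign_group(uid, experiment) == "A")
    return in_a / len(user_ids)


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    print(f"share in group A: {group_share(users, 'aa-test-homepage'):.3f}")
```

Including the experiment name in the hash means a user's group in one experiment does not determine their group in the next.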

caution: a/b testing

confirm in detail that you are testing what you expect

subtle differences can skew results

features involving payments by users can have powerful effects

ethical consideration

predictive models

predictive modeling

formulas to derive measures of user performance

estimates of the efficiency of different systems

fitts' law

predicts time it takes to reach a target using a pointing device

speed and accuracy when moving towards a target

interaction design: time it takes to point at a target

depends on the target's size and distance

decide where to locate buttons and how big to make them

fitts' law

\[
\begin{aligned}
T &= k \log_2\left(\frac{D}{S} + 1.0\right)\\
\text{where } T &= \text{time to move the pointer to the target}\\
D &= \text{distance between the pointer and the target}\\
S &= \text{size of the target}\\
k &\text{ is a constant of approximately 200 ms/bit}
\end{aligned}
\]

uses of fitts' law

tasks where the time to locate an object is critical

where to locate elements on screen in relation to each other

mobile devices with limited space

gesture, touch, eye-tracking, game controllers, 3D selection in VR

simulating users with motor impairments
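Plugging numbers into Fitts' law shows how target size and distance trade off when deciding where to place buttons and how big to make them. A minimal sketch using T = k log2(D/S + 1.0) with the approximate constant k = 200 ms/bit; the layouts are hypothetical, and D and S only need to be in the same unit (pixels here).

```python
import math

K_MS_PER_BIT = 200.0  # approximate constant k from Fitts' law as given above


def fitts_time_ms(distance: float, size: float, k: float = K_MS_PER_BIT) -> float:
    """Predicted pointing time in ms: T = k * log2(D / S + 1.0)."""
    if distance < 0 or size <= 0:
        raise ValueError("distance must be >= 0 and size must be > 0")
    return k * math.log2(distance / size + 1.0)


if __name__ == "__main__":
    # Hypothetical layouts: (distance to target, target size), both in pixels
    layouts = {
        "large button, close": (300, 100),
        "large button, far": (700, 100),
        "small button, close": (300, 20),
    }
    for name, (d, s) in layouts.items():
        print(f"{name}: {fitts_time_ms(d, s):.0f} ms")
```

In this example the large, close button is fastest, and shrinking the target to 20 px costs more time than moving a 100 px target more than twice as far away. As a further assumption, slower movement (for instance when simulating users with motor impairments) could be modeled by passing a larger `k`.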

questions?

reading for next class

What is Mixed-Reality?

Maximilian Speicher, Brian D. Hall, and Michael Nebeling