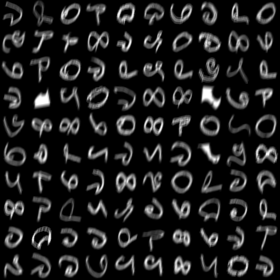

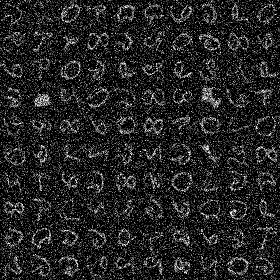

The noisy Bangla dataset is created using the offline Bangla dataset of handwritten digits by adding -

(1) additive white gaussian noise,

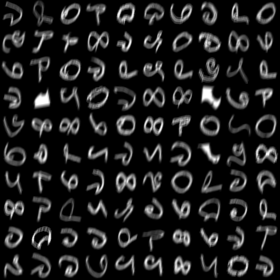

(2) motion blur and

(3) a combination of additive white gaussian noise and reduced contrast to the offline Bangla numeral dataset.
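The three degradations listed above can be sketched roughly as follows. This is only an illustration, not the procedure used to build the datasets: the noise level `sigma`, the blur `length` and direction, the contrast `factor`, and the order in which contrast reduction and noise are applied are all assumptions, since the description does not state them. Images are assumed to be 28x28 float arrays with values in [0, 1].

```python
import numpy as np

def add_awgn(img, sigma=0.1, rng=None):
    """Add zero-mean Gaussian noise (sigma is an assumed value) and clip to [0, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = img + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(noisy, 0.0, 1.0)

def motion_blur(img, length=5):
    """Horizontal linear motion blur: average `length` neighbouring pixels in each row."""
    kernel = np.ones(length) / length
    return np.apply_along_axis(
        lambda row: np.convolve(row, kernel, mode="same"), 1, img
    )

def reduce_contrast(img, factor=0.5):
    """Shrink the intensity range around mid-grey (0.5) by `factor` (assumed value)."""
    return 0.5 + factor * (img - 0.5)

# Hypothetical input: a blank 28x28 image with one vertical stroke.
img = np.zeros((28, 28))
img[4:24, 12:16] = 1.0

awgn_img = add_awgn(img)                        # variant (1)
blur_img = motion_blur(img)                     # variant (2)
combo_img = add_awgn(reduce_contrast(img))      # variant (3), assumed order
```

Passing a seeded generator, for example `add_awgn(img, rng=np.random.default_rng(0))`, makes the noise reproducible.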

The datasets are available here:

bangla-with-awgn.gz

bangla-with-motion-blur.gz

bangla-with-reduced-contrast-and-awgn.gz

The three datasets are Noisy Bangla with Additive White Gaussian Noise (AWGN), Noisy Bangla with Motion Blur, and Noisy Bangla with reduced contrast and AWGN. Each contains the following variables:

| Variable | Size and type | Description |
| --- | --- | --- |
| train_x | 193890x784 double | 193890 training samples; each 28x28 image is linearized into a 1x784 vector |
| train_y | 193890x10 double | 1x10 label vectors for the 193890 training samples |
| test_x | 3999x784 double | 3999 test samples; each 28x28 image is linearized into a 1x784 vector |
| test_y | 3999x10 double | 1x10 label vectors for the 3999 test samples |
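Once the arrays are in memory, the layout described above can be unpacked as in the sketch below. It relies on several assumptions that the description does not confirm: that each 1x10 label vector is one-hot encoded, and that the pixel order within each 1x784 row is either row-major or column-major (the `column_major` flag is a hypothetical switch for trying both). How the `.gz` archives are read is not covered here, because their internal file format is not stated; the arrays below are small synthetic placeholders with the same column counts.

```python
import numpy as np

def to_images(x, column_major=False):
    """Reshape an (n, 784) matrix into (n, 28, 28) images.

    Set column_major=True if each image was flattened column by column
    (an assumption to check by viewing a few samples).
    """
    imgs = np.asarray(x).reshape(-1, 28, 28)
    if column_major:
        imgs = imgs.transpose(0, 2, 1)
    return imgs

def to_class_indices(y):
    """Convert (n, 10) label rows to integer classes, assuming one-hot encoding."""
    return np.argmax(np.asarray(y), axis=1)

# Synthetic stand-ins for train_x / train_y (3 samples instead of 193890).
x = np.zeros((3, 784))
y = np.eye(10)[[2, 0, 9]]

images = to_images(x)          # shape (3, 28, 28)
labels = to_class_indices(y)   # array([2, 0, 9])
```

If the digits appear transposed when displayed, switching `column_major` is the first thing to try.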